Last week, the New York Times published a long article on the never-ending task of moderating Facebook. Drawing on extensive internal documents and PowerPoint slides, many of which Motherboard had previously reported on, the article further illuminates the impossible mandate that Facebook has given itself: to create a single set of rules governing more than two billion users across the globe, encompassing drastically different cultural, racial, sexual, political, and religious demographics.

Facebook moderation is something of a hot-button issue, to put it mildly. In the wake of the 2016 election, the company was widely criticized for letting misinformation run rampant throughout the network. More recently, its executives, and those of other tech companies, have appeared before Congress to be chastised for supposed “anti-conservative” bias (which does not exist). For years, users have posted anecdotes of overly aggressive moderation, and leaked documents have featured tone-deaf examples of which groups do and do not count as “protected” categories (most infamously, “white men” was a protected category but “black children” was not).

The point is, it’s tough. That was the thrust of the Times piece, which ominously described “Facebook’s Secret Rulebook for Global Political Speech” in its headline. The Times piece is the latest exploration of a well-established problem: Given the mandate to moderate its platform, Facebook becomes a de facto global censor. As former Facebook security head Alex Stamos put it on Twitter, “I’m excited for the @nytimes, because they are so very close to a critical realization after years of ‘do more!’: When you tell a powerful organization to solve a societal problem, it will grant itself the powers and access to data necessary to do so. Then, it will likely fail.”

What Stamos is getting at here is the trade-off between moderation and privacy. Calling for Facebook to keep a closer eye on everything often involves asking the company to monitor things it probably shouldn’t be monitoring. The clearest example is encrypted messages sent between users, which can be read only by their senders and recipients. Facebook, as a go-between, cannot read those messages, whether through human reviewers or automated systems. In exchange for the privacy of encrypted messaging, Facebook and its users accept the possibility that people might say and do objectionable things in those messages. Similarly, consider a Facebook post shared within a private group or with a select audience: its intended viewers supply much of the context needed to interpret it, context an outside moderator lacks.

There’s a bit of media criticism in Stamos’s response to the article, as well. “I am glad to see the Times look into the challenges of content moderation at billion-user scale,” he tweeted, “because perhaps they will start to understand that every time they write ‘We saw this content on social media and don’t like it’ the response is the creation of one of thos [sic] PPT decks.” In other words, you can’t really solve the problem of Facebook moderation piecemeal and anecdotally.

He concluded, “If we ask tech companies to fix ancient societal ills that are now reflected online with moderation, then we will end up with huge, democratically-unaccountable organizations controlling our lives in ways we never intended.”

I mean, sure. I think the big assumption here is that anyone is asking tech companies to fix “ancient societal ills.” I can’t speak for all 2 billion-plus users, but I don’t think that’s what users want out of Facebook. I think the company’s critics mostly want to be able to communicate with friends without running into harassment, misinformation, or hate speech. Fixing “ancient societal ills” is more of a goal Mark Zuckerberg set for himself to deflect criticism, make Facebook seem benevolent, and recast the business of selling targeted ads as a noble calling.

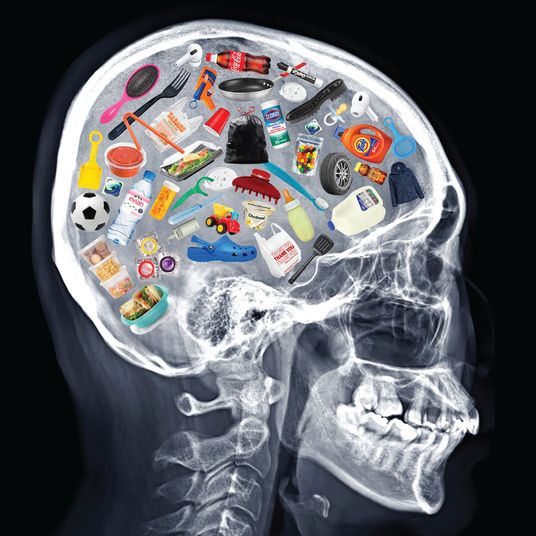

Clearly, coming up with a single set of rules for the globe and then asking low-paid workers to arbitrate disputes within seconds is not tenable. Nor is asking Facebook to monitor every type of communication it offers users. The real problem is structural: Facebook centralizes every person and community under its banner and uses an algorithmically sorted feed to show people what it thinks they want to see instead of what they actually want to see.

What Facebook needs to introduce, more than clearer rules or more consistent enforcement, is friction. To an extent, Facebook brought many of these problems on itself by making public posting the default setting on its network, and by building a revenue model that allows and encourages people to spread their posts widely so that other users see them. Because engagement, meaning how much users interact with the service, is Facebook’s primary metric, the company built a system that boosted whatever kept users engaged. Oftentimes that content was inflammatory. (YouTube faces the same type of problem.) Facebook also built sharing tools that let users post far and wide without really considering their actions. Eliminating friction is great for engagement, but it lets users move faster than their common sense. “Is this status too mean?” “Is this news reliable?” Who cares, it’s already posted.

Facebook’s recent emphasis on Groups, which often set and enforce their own internal community standards, is a means of introducing such friction, a canny way of allowing users to set tailored guidelines. (Some writers have argued that Facebook’s prioritization of Groups has led to just as much unrest, as in the case of the “gilets jaunes,” but even granting that argument, the key problem remains that inflammatory content from Groups is pushed to the News Feed.) The company also recently announced that it would create an independent body to adjudicate content decisions. Facebook is adding friction to its other products, too. This past summer, to combat viral misinformation on WhatsApp, Facebook announced that it was limiting how many groups a user could forward a message to at once, and removed a “quick forward” button for multimedia. The idea is that requiring more effort and intention from users would slow the spread of inaccuracies.

These are the types of structural changes and added features that might help Facebook resolve some of its issues without having to play judge, jury, and executioner. The problem is that they all run counter to what its central product, the News Feed, is designed to be. The Feed is supposed to be centralized and governed by Facebook; it is supposed to show you targeted ads you haven’t requested; and it is supposed to let users broadcast their ideas, however stupid they may be, to as broad an audience as possible, as easily as possible. For Facebook to strike the mythical balance between collective safety and individual liberty, it needs to change both the technical features of its sprawling system and its incentive structure for users. When critics tell Facebook to “do more,” as Stamos put it, that doesn’t just mean the company needs to take on more responsibility for itself. It means Facebook should reflect on how its systems encourage and exacerbate bad behavior, and then change (or abandon!) those very systems, even if it means surrendering some power and money in the process.