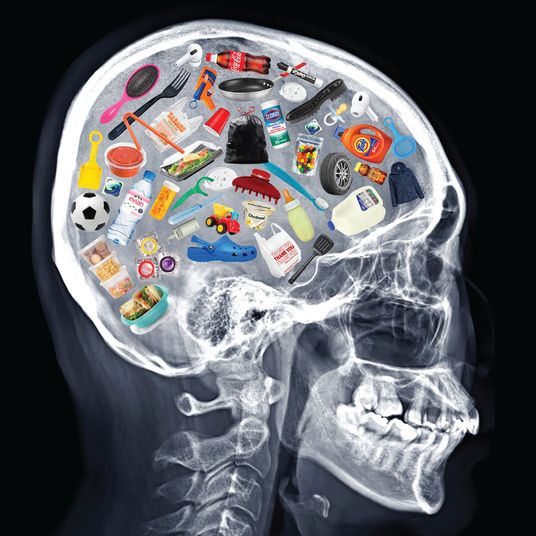

Do you know just how detailed a demographic and psychographic profile Facebook has of you? Per a new Pew Research report, odds are you don’t — and if you found out, odds are you’d be uncomfortable with just how much Facebook knows.

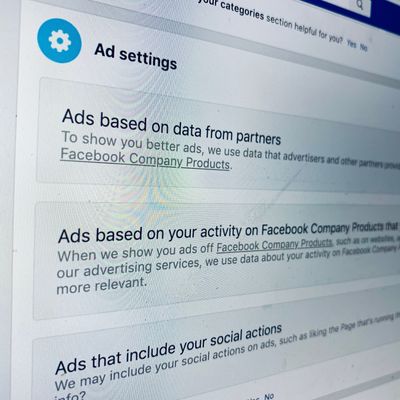

Pew surveyed 963 Facebook users, asking them whether they understood how the site uses algorithms to infer information about them: not just broad demographics, but their political leanings, major life events, food preferences, hobbies, entertainment choices, and the digital devices they use. Pew then showed respondents their “ad preferences” page and asked how accurately they thought the listings described them and how comfortable they were with the information displayed.

Pew found that Facebook's ad preferences page listed detailed information for 88 percent of the people surveyed, and that 74 percent of them had not known Facebook kept this information until the survey directed them to that page. Once they saw what Facebook had on them, roughly half of users (51 percent) said they were uncomfortable with it.

When it came to politics, 51 percent of those surveyed had been assigned a political “affinity” by Facebook. Of those people, 73 percent said Facebook was describing their politics very or somewhat accurately, while 27 percent said Facebook wasn’t describing their political views accurately.

Pew also delved into Facebook’s use of “multicultural affinity.” Facebook doesn’t categorize people by race, but it does categorize them by “affinity” with a racial or ethnic group. (Notably absent from the possible “multicultural affinity” groups: white people, perhaps because Facebook and its advertisers assume that being white is the default.)

Only 21 percent of Facebook users were listed as having a “multicultural affinity.” Of those, 60 percent said they did have a very or somewhat strong affinity for the group to which they had been assigned, while 37 percent said their affinity for that group was not particularly strong. Meanwhile, 57 percent of those tagged with a “multicultural affinity” said they consider themselves to be members of the racial or ethnic group described.

This “multicultural affinity” ad-targeting technique has gotten Facebook in trouble before: a ProPublica investigation found that real estate advertisers could exclude people with “multicultural affinities,” effectively creating ads meant to be seen only by white people, in violation of the federal Fair Housing Act. As Pew's own survey data shows, Facebook does a fairly reasonable job of sorting people by race without saying outright that it is sorting people by race, which raises all sorts of troubling possibilities about which ads are shown to whom.

While Pew focused its research on Facebook because of the social network's unmatched size and scale, other networks like Twitter and Instagram use many of the same basic techniques to classify their users and help advertisers target specific groups of them. (Instagram, which is owned by Facebook, uses the same ad-targeting backend as Facebook itself: once advertisers determine which group they want to micro-target, they can reach it just as easily on Instagram as on Facebook.)

You can opt out of personalized advertising on Facebook and other social networks, but the truth is that if you use the internet with any regularity and don't take significant steps to obscure your identity, advertisers can target you with unnerving specificity regardless, inferring things like your age, religion, and marital status, and even your likelihood “to suffer from overactive bladder.”

Your ostensible anonymity on the internet is one of the basic illusions that undergirds billions of dollars of economic activity. Data brokers aggregate data about you based on what sites you visit, what you buy, how often you use a credit card, and the paper trail you leave when you get married, buy a car, or change addresses, then bundle this information and sell it to other firms that help target ads to you. It's a multibillion-dollar business that shows no signs of slowing down. (One estimate from the Financial Times says data brokers will earn about $120 billion per year in Europe alone by 2020.)

Some consumer advocacy groups are beginning to test whether the EU's General Data Protection Regulation (GDPR) will stop data brokers and others who gather information without explicit consent, but there's little hope that similar regulation will come to the United States in the near future. And in the case of Facebook and other social networks, you've already given your consent for them to gather all of this information about you. Sure, that consent is buried deep in terms-of-service and privacy pages that these companies know you will never read, and much of their business model relies on letting advertisers micro-target their users. But as Pew's data shows, most of us are unaware of just how detailed this information is, and are uncomfortable with it once we discover how fine-grained a picture advertisers can build of us. Less clear, and not asked in Pew's survey, is whether this would lead anyone to actually stop using Facebook.

Update: We received this statement from Joe Osborne, a Facebook spokesperson: “We want people to understand how our ad settings and controls work. That means better ads for people. While we and the rest of the online ad industry need to do more to educate people on how interest-based advertising works and how we protect people’s information, we welcome conversations about transparency and control.”