Amazon, IBM, and Microsoft made moves this week to limit the use of their facial-recognition products, an acknowledgment of the flaws in the technology and the potential for their misuse, especially in ways that harm people of color. On the latest Pivot podcast, Kara Swisher and Scott Galloway discuss the racial-bias problems with facial-recognition technology, tech companies’ responsibility for those issues, and a surprisingly relevant 1996 Pam Anderson movie.

Kara Swisher: Amazon and IBM are ending their facial-recognition-technology products. In a letter written to Congress this week, IBM’s CEO, Arvind Krishna, wrote that the company would no longer be offering images and technology to law enforcement and would support efforts in police reform and more responsible use of the technology. There have been several studies showing that facial-recognition technologies are biased against people with black and brown skin and can cause harm when used by law enforcement. Later in the week, Amazon released a statement saying it would be implementing a one-year moratorium on police use of its facial-recognition technology. Amazon also called on Congress to make stronger regulations. Law-enforcement agencies around the country contract with Clearview AI, a start-up that scrapes images posted around the internet to identify people from sources like security videos. You know, I did this long interview with Andy Jassy of Amazon Web Services, and I was pressing him on this very issue, and he seemed to be like, “Nothing to see here.” Now what do you imagine’s happening, Scott?

Scott Galloway: I think Amazon — I think a lot of big tech — has seen many of these issues recently and the attention being placed on these issues as an opportunity for redemption. And I think that they look at the economic upside versus the opportunity. I think they look at it through a shareholder lens, and they say, “What is the upside here of facial-recognition technology, as it relates to our shareholder growth versus our ability to basically starch our hat white?” I think when Tim Cook says that privacy is a basic human right, he may believe that. But he’s also de-positioning his competitors, Facebook and Google, who are totally focused on molesting your data as core to their business model.

Swisher: Can you move away from “molest”? But keep going.

Galloway: And the question is — I am asking this to learn, because I know you’re very concerned about this — but if law enforcement can use your DNA or forensics, why shouldn’t it be allowed to use facial-recognition technology?

Swisher: It’s not that law enforcement shouldn’t be allowed to use it. I think DNA was really bad for a long time, and you saw those people who were convicted and then later exonerated. I mean, I think it’s in a stage where it just doesn’t work right —

Galloway: Because it’s error-filled.

Swisher: It’s error-prone. And I think it may not be error-prone going out the door, but then law enforcement agencies use it badly. And because it’s a question of life or death, it has to be perfect or almost nearly perfect.

Galloway: Well, witnesses aren’t perfect.

Swisher: No, but this should be.

Galloway: What part of the prosecutorial process is perfect?

Swisher: Come on. This isn’t like witnesses. This is giving people technology that people can act on and make bad decisions about. This is like their car is blowing up. Like they’re, “Oops” — like that kind of stuff. Or their guns not firing correctly or whatever.

Galloway: I have no idea what that means. Your car blowing up or your gun not firing?

Swisher: I’m just saying a lot of their equipment is supposed to work and their technology is supposed to work. They should use almost no guns anymore. I think a lot of people are sort of sick of their use of guns. But when they buy any equipment, it needs to work. And this is equipment and technology. I think Amazon’s sort of shoving the ball to Congress. Now there should be, definitely in this area, national legislation. Of course, now, it’s being piecemeal. San Francisco will ban it, and another area doesn’t. And so I think they have to think this is a national discussion.

I interviewed the guy who does most of the body cams on police, and he doesn’t want facial recognition in there. He doesn’t think it’s ready for prime time. These are people who are in the business and understand how quickly it can be abused, or not abused as much as badly used. And so it’s interesting that they did this one-year moratorium. And why now? After being harangued by me and many others way before me, why did they decide to do it now? And you’re right; it’s this waiting for the protests to die down or just “It looks good in a press release.” I don’t know. I’d like to know why they made the decision now. I’d like to see what the decision-making process was. It would be nice for transparency.

Galloway: I think there’s a deeper issue. And it goes to these bailouts, which I think are going to underline one of the core problems here, and that is a loss of trust in our institutions and our government. Because you talked about DNA being wrong. DNA has also corrected the record and freed a lot of inmates who were incorrectly prosecuted.

Swisher: Yes.

Galloway: So science, I think, is a wonderful thing, both in terms of crime prevention, prosecution, and also exonerating people who were wrongly accused and sometimes jailed for decades. So I get that we have to be careful around saying that because it’s science, it’s binary, that it’s a hundred percent, when it isn’t. But I think it goes to this notion that people are losing faith in our institutions because the people running our institutions or our elected leaders are quite frankly undermining them.

You know, when you have Bill Barr, the attorney general, the head of the DOJ, say that there’s evidence of all these far-left groups. And then the data comes out — and this hasn’t gotten enough oxygen — the data is showing people who have been prosecuted, arrested and prosecuted, for really sowing violence and destruction at these protests, most of them don’t have any affiliation. And the only ones that they could find that were affiliated with any group were affiliated with far-right groups.

Swisher: Yeah, that’s right.

Galloway: And when you have elected leaders undermining and overrunning your institutions, we begin to lose faith in our institutions and say, “We just don’t trust them to handle any sort of science.” And it’s a shame because science is an incredible tool for both people who should be prosecuted and people who should not be prosecuted. It can also declare people’s innocence.

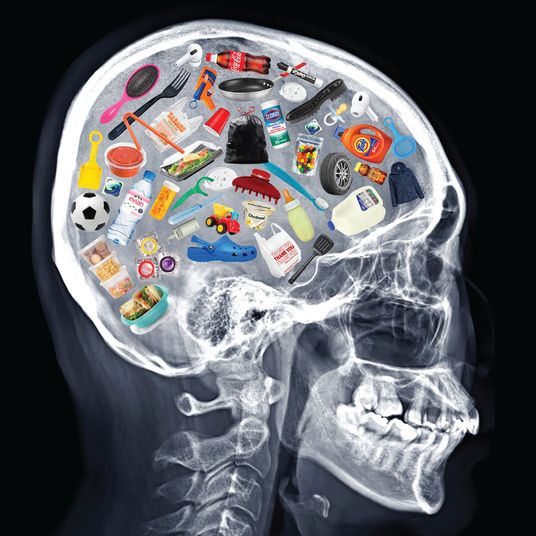

Swisher: Yes, I agree. But I think facial-recognition technology shouldn’t be made so badly that it can’t correctly recognize people of color. They’re putting stuff out the door that doesn’t work on all citizens. And especially when people of color are at such risk of being misidentified, they cannot get this wrong. They cannot. The fact that they let a product out the door that does this when used — they need to anticipate their products. And again, Scott, I don’t think they need to anticipate every problem, but boy should it work on everybody’s faces and people of color. Same thing with AI. Boy should the data that’s going in not be data that creates the same problems.

I think my issue with Amazon is that it’s like, “Well, let Congress …” It’s always like, “Let Congress do this.” I’m like, “Why don’t you put out technology that doesn’t appear to be so flawed?” And Amazon tended to point the finger at police at the time. “If you don’t use it this way, it won’t work” kind of stuff. But why does it always not work that way and put people who are already at risk in general with police, with law enforcement, in even more risk or more problems that would lead to it? And you know, one of these is one too many. It’s interesting that IBM moved in here because IBM’s not a big player here. So it was sort of — I think you call it “virtue signaling,” because it’s not a player. But Amazon certainly is the most important player in this area. Although there are several different players here.

Galloway: Yeah. I just love, just from pure selfishness, biometrics. I don’t have shoes with shoelaces. I purposely try never to have passwords on anything, which I realize makes me a target. And I don’t have keys. And I love the idea of a biometric world where it recognizes your face, your fingerprint, for access to everything. I think people spend so much time and it’s such a hassle, this false sense of security. I’ve never understood locks. If somebody wants to get into your house, they’re going to get in.

Swisher: Yes, indeed.

Galloway: I just never understood it.

Swisher: I agree. Well, but biometrics can be abused. You know what I mean?

Galloway: Yup.

Swisher: And of course, I have to say you don’t think this way when you …

Galloway: Right. Because I have the privilege of being a man that doesn’t feel unsafe.

Swisher: Right.

Galloway: A hundred percent. I get to walk around with a sense of security, and the majority of the population doesn’t have that luxury.

Swisher: Or even anticipating problems. There was one of my favorite movies; it’s called Barb Wire with Pamela Anderson.

Galloway: I like it already.

Swisher: It’s about biometrics. You need to sidle up to your giant couch and your beautiful home and watch this movie. It is about the future where they look at your eyeballs. It was a long time ago. I remember it riveting me. And there was eyeball trading in it. I don’t even really remember what was going on.

Galloway: Yeah. That was Minority Report.

Swisher: … This was before that. It was called Barb Wire. And she ran a bar and she was sort of like the Casablanca character. And then she ends up being good. You know, she’s like, “Eh, just take my … I’ll take your money” and this and that. But, and then she ends up helping the rebels or whatever the version of that is.

Galloway: She’s a deeply misunderstood artist, Pamela Anderson.

Swisher: I have to say I’ve watched Barb Wire so many times. I can’t believe I’ve spent my life watching it.

Galloway: She’s Canadian, Pam Anderson.

Swisher: Okay. I have no information about her. But anyway, I do imagine a world where it could be woefully misused, and I know there’s all kinds of points of information, but biometric takes it to a DNA. I was an early user to Clear; I signed up when Steve Brill started it. And I never thought at the time — I remember going down there to take the picture, which is still in the system, which is super-old. And I was really fascinated by it more than worried about it at the time. Now, I’m like …

Galloway: I love Clear. Don’t you love Clear?

Swisher: I do. But when it started to get bought and sold, they had some financial troubles and everything. And so when that happened, I was like, Oh goodness, they have my … I thought, Well, I’m done. I’m in Barb Wire now because they have my eyeballs.

Galloway: I don’t think you can put technology back in a bottle. I don’t think that’s the answer. I think the answer is to have slow thinking, public institutions really think through how to regulate it. But I think the notion that we’re going to just kick the can down the road and stop investing in the technology or not understand it as well, I don’t know if that works. I worry the bad actors don’t cease their investment in it and use it for less benign purposes. But I would love Clear to run my life. I think it does a great job.

The dark side of Clear is it’s the further “caste-ing” of our society, where if you don’t have money, if you can’t afford business class; if you don’t fly a lot, you end up waiting in line for three hours at an airport. And then if you’re 1K status, you get this line. And then finally, if you’re Clear and you have an American Express card, you get to your plane in two minutes versus two hours. It’s more and more segmentation of our society based on wealth, which is one of the attributes of a capitalist society. But it feels like it’s getting out of control.

Pivot is produced by Rebecca Sananes. Erica Anderson is the executive producer.

This transcript has been edited for length and clarity.