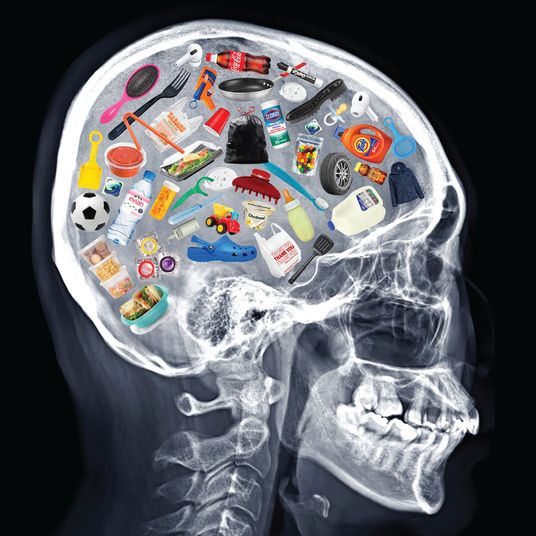

Over the past 15 years, the mental health of young people in the United States has rapidly deteriorated. Between 2007 and 2018, the suicide rate among Americans ages 10 to 24 increased by nearly 60 percent. After declining over the ensuing two years, youth suicides rose again in 2021.

For every American who takes their own life, many more suffer from nonfatal forms of mental distress. The rate of major depressive episodes among U.S. adolescents increased by more than 52 percent between 2005 and 2017. The number of teen suicide attempts in the U.S., meanwhile, increased dramatically between 2019 and 2021.

Young men are more likely to kill themselves than young women are, but that gap has narrowed in recent years as the surge in psychological distress has disproportionately affected young women. Among teen girls, suicide attempts rose 51 percent between 2019 and 2021, compared with 4 percent for teen boys.

In a recent survey by the Centers for Disease Control and Prevention, the prevalence of persistent sadness among teenage girls in the U.S. hit an all-time high. Nearly 60 percent of such adolescents reported feeling sadness every day for at least two weeks during the previous year, twice the rate among boys. Most alarming, one in three teen girls had considered taking their own lives.

There is no expert consensus about the causes of this mental-health crisis. One prominent theory blames social media. There was a sudden jump in rates of depression, anxiety, and self-harm among adolescents around 2012, shortly after daily social-media use became pervasive among teens. As the social psychologist Jonathan Haidt has put it, “Only one suspect was in the right place at the right time to account for this sudden change: social media.”

A number of studies and experiments have produced evidence of a correlation between high levels of social-media use and mental distress. Still, other research has contested that connection. And while social-media use has skyrocketed in virtually every nation over the past decade, not every country has seen rising rates of adolescent mental illness. Faced with these complexities, many experts have insisted it is too early to reach strong conclusions about the roots of America’s adolescent mental-health crisis.

But some prominent commentators disagree.

Earlier this week, the Washington Post’s Taylor Lorenz suggested that the sources of this despair are plain to see: The world is simply much more stressful, insecure, and dark today than it was when previous generations were teenagers. In Lorenz’s telling, young people who feel there is no hope for them are suffering less from depressive delusions than from oppressive clarity.

“People are like ‘why are kids so depressed it must be their PHONES!’ But never mention the fact that we’re living in a late stage capitalist hellscape during an ongoing deadly pandemic [with] record wealth inequality, 0 social safety net/job security, as climate change cooks the world,” Lorenz wrote on Twitter. “Not to be a doomer but u have to be delusional to look at life in our country [right now] and have any [amount] of hope or optimism.”

The columnist went on to suggest that the young people of the 1950s enjoyed objectively better material prospects than young people do today.

Fortunately, Lorenz’s claims range from dubious to simply false. Whatever caused young people in the U.S. to develop higher rates of mental illness over the past two decades, it was not an objective collapse in living standards, employment prospects, or opportunities for material security. Indeed, Lorenz’s tweets do more to buttress Haidt’s hypothesis than her own; the fact that her ill-substantiated catastrophism performs so well on social media lends credence to the notion that Twitter and TikTok are vectors of depressive thought.

Teen mental health has gotten worse even as the economy has improved.

Lorenz isn’t wrong to suggest that economic conditions can influence the prevalence of mental distress. Unemployment and poverty are both major risk factors for depression. Recessions are associated with elevated rates of suicide. And there is some evidence that robust social-welfare states improve mental health by reducing material insecurity. It is therefore reasonable to suspect that America’s relatively stingy social safety net and elevated level of child poverty are implicated in its youth mental-health crisis.

However, these factors do not constitute a sufficient explanation for that crisis. Whether the contemporary U.S. qualifies as a “hellscape” is inherently subjective. If today’s America is a neoliberal Hades, however, then this was even more true of our country circa 2009. That year, the U.S. unemployment rate hit double digits; today, it sits at its lowest level in half a century. As rates of adolescent depression and suicide rose between the Great Recession and 2019, labor-market conditions massively improved. Over the same period, the U.S. welfare state grew, with 21 million Americans gaining access to public health insurance through the Medicaid expansion.

Separately, over the past several years, humanity’s prospects for averting catastrophic climate change have dramatically improved. As of 2016, climate scientists projected that a “business as usual” scenario (that is, one in which the world remained on its existing emissions trajectory) could result in five degrees Celsius of warming, enough to render much of the planet uninhabitable. Today, that worst-case scenario is expected to yield three degrees of warming. If the United States, China, and the European Union successfully implement their existing decarbonization plans, the ceiling on future warming will fall further.

Still, the U.S. political economy remains obscenely inequitable, its safety net exceptionally thin, and the climate crisis menacing. The probability of worldwide calamity may be falling, but it is far from negligible; we don’t know precisely how much warming it will take to trigger disastrous feedback loops.

Thus it is possible that the social pathologies cited by Lorenz are preconditions for today’s mental-health crisis. If the U.S. had developed into a Nordic-style social democracy after World War II and the global economy had been decarbonized in the early 1990s, perhaps contemporary rates of teen depression and suicide would be radically lower.

If inequality, inadequate social-welfare provisions, and climate change were necessary for bringing about the present crisis, however, they were plainly insufficient. Since rates of adolescent mental distress began rising early in the previous decade, Americans’ employment prospects have dramatically improved, and their access to health care has grown more secure. Even the climate outlook has not been continuously worsening. And since most of the increase in teen mental illness preceded the pandemic, COVID does not suffice to explain it. Something else must have changed beyond the objective bleakness of the economic and ecological landscapes.

Zoomers shouldn’t want to party like it’s 1958.

More broadly, Lorenz’s theory that American teens are historically sad because their economic prospects are historically unfavorable rests on a hallucinatory nostalgia for an economic past that never existed.

Many aspects of the U.S. postwar political economy are worth mourning. Relative to today, the 1950s and ’60s saw higher rates of economic growth and a more equitable distribution of income between capital and labor.

Nevertheless, Americans entering the labor force today can expect to earn a much better living than their predecessors in the 1950s. Between 1955 and today, the median household income in the U.S. has more than doubled in inflation-adjusted terms. Even after COVID and inflation, Americans’ living standards remain near historic peaks. And of course, as Lorenz herself acknowledges, for Black, LGBT, and female Americans, opportunities for economic advancement and personal liberty are vastly more abundant today than they were in 1958. Further, contemporary U.S. teens enjoy far more insulation from the market’s cruelties than previous generations did. While austere relative to many of its Western peers, the contemporary U.S. welfare state is robust compared with its past incarnations.

It is true that a select population of unionized male industrial workers enjoyed employment benefits in the postwar era that outstrip those presently standard in the labor market, including defined-benefit pensions. But such workers never constituted anything remotely close to a majority of the labor force, and their livelihoods were nowhere near as secure as Lorenz suggests. Further, such workers endured far higher risks of injury and death on the job than the median worker in today’s economy. Owing to the Cold War, meanwhile, they lived under a much higher threat of sudden nuclear annihilation.

Among online progressives, there is sometimes a tendency to view any acknowledgment of progress as an apology for the status quo. But I think this has more to do with social media’s negativity bias (i.e., negative information tends to be more physiologically stimulating and thus viral) than with any objective truth about the political implications of touting positive developments. The fact that Americans enjoy higher incomes than virtually ever before makes our failure to abolish child poverty, invest adequately in social goods like child and elder care, and provide robust mental-health services to our suffering adolescent population all the more damning. At the same time, the fact that we have managed to expand social welfare and reduce myriad forms of social inequity over the past few decades gives us reason to believe in the possibility of progressive change.

Human existence has never been easy. And in some respects, life in 2023 may be more challenging than in the past. Contemporary teens are more likely than their predecessors to lack the existential comfort offered by religious faith or the sense of communal solidarity provided by in-person civic groups. But it simply is not the case that Zoomers’ material prospects are much worse than previous generations’ (or, for that matter, that a human being’s level of depression reliably reflects their objective economic well-being).

In a great many respects, the world has been getting better. But kids have been getting sadder. Even as life has improved in a wide variety of ways since the 1950s, the teen suicide rate has risen substantially since that era. Explaining that requires more than reciting the millennial left’s (generally well-founded) complaints about contemporary American society.

We should resist the temptation to use social crises as political cudgels.

By itself, the fact that a Washington Post columnist engaged in hyperbole on Twitter may not warrant comment. But I think Lorenz’s tweets reflect a broader tendency within the discourse to view novel social crises through a lens of ideological self-congratulation rather than intellectual curiosity. Among commentators, there is a strong incentive to abruptly enlist any new sign of social dysfunction into whatever fight you are already waging. I’ve surely done this myself, but it’s an impulse that should be resisted. We owe it to those suffering from any given social calamity to maintain curiosity about its causes. In failing to do so, we risk wielding tragedies as political props and seeing victims as metaphors instead of people.

This tendency has been especially conspicuous in the discourse around the opioid epidemic. Nearly 1 million Americans have died from a drug overdose since 1999. In recent years, the annual death toll has approached 100,000. In all this death, many pundits have glimpsed confirmation of their longstanding complaints about American life. The opioid epidemic has been variously blamed on America’s transformation into a “warp-speed, post-industrial world,” Wall Street’s investments in single-family housing, and the Biden administration’s contempt for white people.

Most prominently, the drug overdose epidemic has been framed as an index of the white working class’s social decline. Between 1999 and 2013, the death rate for white, middle-aged, working-class Americans increased by 22 percent, largely as a result of opioid overdoses. Over the same period, medical advances pushed down the death rates of college-educated whites and of working-class members of other racial groups.

The economists Angus Deaton and Anne Case brought these disconcerting facts to national attention in 2015. Their research painted a portrait of a white working class besieged by “despair.” Erosion in the demographic’s wages, marriage rates, job quality, social cohesion, cultural capital, and, perhaps, racial privilege was ostensibly driving an ever-larger number of non-college-educated whites into self-destructive behavior.

In the ensuing years, Donald Trump and the journalists who covered his campaign began telling a similar, but less nuanced, story. As the opioid crisis deepened in many white, rural areas, Trump (and some of his interpreters) began painting such “American carnage” as a symptom of the white working class’s singular economic dispossession.

But it’s now apparent that this narrative was largely wrong. In a 2018 study, the economist Christopher Ruhm collected county-level data on what Case and Deaton dubbed “deaths of despair” — drug overdoses, suicides, and alcohol-induced deaths. He then compared these against five measures of economic health in each county: rates of poverty and unemployment, the relative vulnerability of local industries to competition from foreign imports, median household income, and home prices.

He found that the correlation between economic hardship and “deaths of despair” was extremely weak. More economically depressed counties were only slightly more likely than prosperous ones to suffer from a large number of such deaths. And even this small discrepancy could plausibly be attributed to confounding variables.

Ruhm concluded that the opioid crisis was being driven less by demand than by supply. Economic and social decline may have provided favorable kindling for an overdose epidemic. But it was the explosive growth in the availability of opioids that started the fire.

This assessment implied that the white working class was not uniquely vulnerable to opioid abuse because of its singular despair. You didn’t need to be a downwardly mobile white factory worker to become addicted to OxyContin. It is a highly addictive substance. And when the company that owned the patent on that substance began marketing it as a safe treatment for chronic pain in the 1990s, and doctors began prescribing the pills en masse, an increase in drug abuse and overdoses was almost certain to follow, regardless of America’s economic and social health.

Initially, Black Americans had been spared the brunt of the opioid epidemic, largely because racist white physicians had not taken their chronic pain seriously. If that were to change, or if the supply of illicit opioids were to increase dramatically, Ruhm’s research suggested, the demographic character of the epidemic’s victims would change too.

And this is precisely what’s happened since his paper was published. When fentanyl displaced prescription pills as the opioid epidemic’s primary driver, Black Americans’ overdose death rate came to outstrip that of white Americans. Meanwhile, opioid deaths grew less geographically concentrated in lower-income states.

Long-term structural pathologies in U.S. society aren’t irrelevant to the opioid crisis. But they now appear less relevant than comparatively contingent developments: Had the Food and Drug Administration never allowed Purdue Pharma to mass-market OxyContin as a safe treatment for chronic pain, the pharmaceutical phase of the opioid epidemic almost certainly would not have happened. After all, other Western countries have suffered from working-class wage stagnation and deindustrialization. But their doctors have prescribed far fewer opioids than American ones, and, not coincidentally, those nations have seen far fewer overdose deaths in recent years.

Separately, if drug cartels had not become more adept at producing low-cost synthetic opioids in small, difficult-to-detect labs, the fentanyl crisis never would have reached its present proportions.

Nevertheless, pundits across the political spectrum have carried on citing the opioid crisis as a testament to whatever diffuse social pathologies they happen to be mad about. These arguments are not necessarily wrong; it’s highly plausible that America’s fraying social ties and economic inequities have made our drug-overdose epidemic worse than it otherwise would have been. But too often, this commentary betrays a seeming indifference to factual reality, as when Tucker Carlson argues that the Biden administration doesn’t care about the opioid epidemic because its victims aren’t “from officially marginalized groups.”

Were commentators less concerned with the opioid crisis’s utility to their pet causes and more concerned with the realities of that tragedy, they would spend less time waxing lyrical about the betrayal of blue-collar whites and more time demanding greater public funding for medication-assisted treatment and other harm-reduction policies.

It seems possible that the story of the teen-suicide crisis will come to resemble that of the opioid epidemic. Perhaps in a few years, we will find that the U.S. economy’s structural inequities had less to do with American adolescents’ declining mental health than did the sudden introduction of a highly addictive (digital) technology.

The case for blaming rising rates of teen mental distress on social media has its strengths. Not only is this hypothesis congruent with the timing of the teen mental-health crisis, but it also fits the crisis’s skewed gender profile. Studies examining the adverse effects of social-media use have routinely found that girls are more likely to suffer psychologically from the pastime than boys are. Researchers at Brigham Young University tracked the media diets and mental health of 500 teens through annual surveys from 2009 to 2019; they found that social-media use had little effect on boys’ suicidality but that girls who used such platforms at least two hours a day when they were 13 were at “a higher clinical risk for suicide as emerging adults.”

This same gender gap has surfaced in several other studies of the relationship between social-media use and depression in the United States, the United Kingdom, and Canada. Most of this research has merely established a correlation between girls’ social-media use and mental-health problems, which leaves open the possibility that causality runs in the opposite direction: Rather than habitual social-media use rendering girls depressed, depression may render girls habitual social-media users.

But some studies have generated evidence of causality. In British Columbia, access to high-speed internet expanded in recent years, reaching some neighborhoods before others. Researchers found that when areas secured access to it, their rates of social-media usage went up. They then found that the introduction of high-speed internet to a locality was associated with a sharp increase in mental-health diagnoses among girls.

Yet several studies have failed to document a tight correlation between social-media use and depression, and adolescent mental health has not uniformly declined everywhere that Twitter, Instagram, and Facebook have attracted mass user bases. It’s possible that Lorenz’s general intuitions will someday be validated. Perhaps we’ll eventually find that social media (or some other exogenous force) drove up adolescent mental illness in nations with high levels of inequality but not in those with more equitable political economies. Or maybe we’ll conclude that social media plus abundant personal firearm ownership is a lethal combination.

What we can say with confidence is that we do not know and that we owe it to those suffering in our mental-health crisis to be honest about that fact. In the absence of dispositive data on what’s causing American adolescents to develop depression and suicidal ideation in large numbers, we should concentrate on helping them once they contract such illnesses. Increasing funding for mental-health services is conducive to that aim. Telling suicidal teenagers they would “have to be delusional to look at life” and feel any amount of “hope or optimism” probably isn’t.