In January, I raised my hourly rate to $300 before wondering if I could get away with charging anything at all.

I teach high-schoolers how to write college essays, helping students claw their way out of hackneyed bildungsromans and into deftly tuned narratives. The clients (and their parents) can be a lot to handle, but my results ensure that I have a new cluster of rising seniors every summer. And the service I provide is in perpetually high demand among the moneyed and desperate private-school crowd.

Recently, though, the rise of ChatGPT had me questioning how much longer this comfortable arrangement could last. I started to fear obsolescence when I heard about uncannily passable AI-generated letters of recommendation and wedding toasts — forms of writing not a million miles away from my specialty. So, in an attempt to get to know my new enemy — and gauge whether I was still employable — I paid $20 for access to the “more creative” GPT-4.

Nervously, I prompted ChatGPT with a series of bullet points and fed it what anyone who has applied to college in the past 15 years knows is the formula for the Common Application personal essay: “Write 600 words including a catchy hook to draw the reader in, a conflict, and a thoughtful self-reflection.”

ChatGPT didn’t even take a beat to process my outline; it spat out an essay as fast as I could read it. Its first draft (about a freak dishwashing accident that leads to a lesson in the power of fear) was unsettlingly well-composed, but stiff in a way that kept it from resonating emotionally (often a problem with student-generated drafts, too). ChatGPT wrote, “I was horrified, not just at the sight of my own blood but also at the thought of needing stitches. In that moment, I was transported back to the time when I was a child and I got my first stitches.” Not bad, but not exactly transcendent, either.

The next big test: Could this thing incorporate feedback? I replied that the essay was “a little formal, can you make it more conversational?” Done. ChatGPT added a few “you see”s and began several sentences with “So.” The essay was suddenly more casual: “The experience taught me that fear, no matter how powerful it may seem, can be overcome with perseverance and determination” became “But eventually, I realized that this fear was holding me back and preventing me from enjoying something that brought me so much joy.”

With these small tweaks, ChatGPT’s effort was already significantly better than most first drafts I come across. I tried to throw it off with something random, adding, “My favorite comedian is Jon Stewart. Can you incorporate that into the essay?” ChatGPT wrote three new sentences that explained how Stewart “helped me see the lighter side of things and lifted my spirits.”

I told it to be funny. It tried. I corrected it, “No, that’s too corny, make it more sarcastic.” It revised, “And let’s face it, what’s a little scar compared to the joy of a rack of clean dishes?” Then I wrote, “Add in my high-achieving older brother who I always compare myself to (a classic Common App essay character) as a foil.” I specified that the brother breaks his collarbone around the same time the main character has to get stitches. ChatGPT came up with this: “And here I was, feeling guilty for even complaining about my measly scratch when his pain was so much worse. It was like a twisted game of ‘whose injury is more severe?’” In seconds, ChatGPT revised an amount of material that typically takes my students (with my help) hours to get through. Intrusive thought: Even if I lower my rates, there won’t be any demand.

And then I slowed down, stopped panicking, and really read the essay.

I began noticing all the cracks in it. For one thing, ChatGPT was heavy on banal reflections (“Looking back on my experience…”) and empty-sounding conclusions (“I am grateful for the lessons it taught me”) that I would never let slide. I always advise students to get into specifics about how they’ve changed as people, but ChatGPT relied on anodyne generalities. Most importantly, it couldn’t go beyond a generic narrative into the realm of the highly specific. (A good student essay might have, say, detailed how Stewart’s Mark Twain Prize acceptance speech helped them overcome a fear of public speaking.)

AI is also just lazy. There’s nothing wrong with an occasional transitional phrase, but using “Slowly but surely,” “Over time,” “Looking back on my experience,” and “In conclusion” to lead off consecutive paragraphs is only okay if it’s your first time writing an essay. Leading off a conclusion with “In conclusion” means you’re either in sixth grade or satisfied with getting a C.

While the essay technically met every criterion I set (hook, conflict, self-reflection), it also failed the main test I pose to students: Have you ever read a version of this story? The answer here was most definitely “yes.” It’s uncanny how well ChatGPT mimicked the contrived essay that I’m paid to steer kids away from — the one you’d be shown as an example of what not to do in a college-essay seminar. It reads like a satire of one of those “the ability was inside me all along” or “all I needed to do was believe in myself/be true to myself/listen to my inner voice” narratives rife with clichés and half-baked epiphanies. ChatGPT’s basic competence led me to overlook the middling quality of its execution. It’s the same disbelief-to-disillusionment arc ChatGPT has inspired elsewhere — take the viral AI travel itinerary that seemed perfect until people pointed out some pretty glaring (and possibly dangerous) errors.

Credit where it’s due. I expend a lot of effort translating overwritten, clunky, and generally unclear student prose. ChatGPT excels at writing cleanly, if flatly. It’s great at producing simple, informational text from a set of data. Creating a rule book for Airbnb guests, writing a “help wanted” ad, drafting an email with details for a surprise party: These are perfect cases for ChatGPT right now. Handed a jumble of raw details for any of these, ChatGPT would translate the information into a block of concise text that wouldn’t need style, voice, or flair to be successful. If you want to share facts in a digestible and clear way, ChatGPT is your guy.

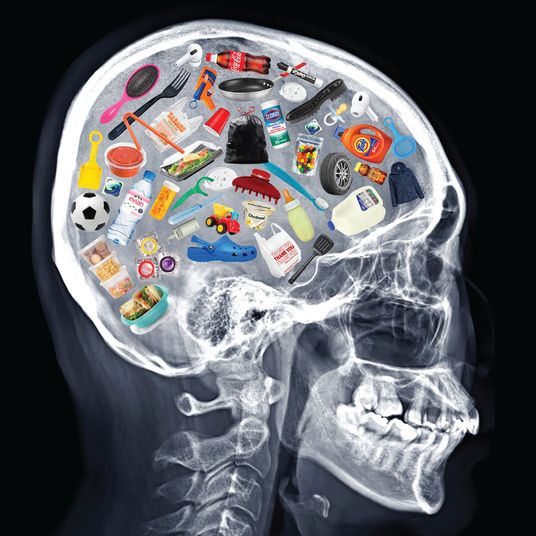

But ChatGPT failed hardest at the most important part of the college essay: self-reflection. Literary agent Jamie Carr of the Book Group describes great storytelling as something that makes “connections between things and ideas that are totally nonsensical — which is something only humans can do.” Can ChatGPT bring together disparate parts of your life and use a summer job to illuminate a fraught friendship? Can it link a favorite song to an identity crisis? So far, nope. Crucially, ChatGPT can’t do one major thing that all my clients can: have a random thought. “I’m not sure why I’m telling you this” is something I love to hear from students, because it means I’m about to go on a wild ride that only the teenage brain can offer. It’s frequently in these tangents about collecting cologne or not paying it forward at the Starbucks drive-thru that we discover the key to the essay. I often describe my main task as helping students turn over stones they didn’t know existed, or stones they assumed were off-limits. ChatGPT can’t tap into the unpredictable because it can only turn over the precise stones you tell it to — and if you’re issuing these orders, chances are you already know what’s under the stone.

In the South Park episode “Deep Learning,” Clyde and Stan use AI to compose thoughtful, emotionally mature text messages to their girlfriends. When Bebe asks if she should cut her hair, Clyde (via ChatGPT) replies, “You would look great with any length of hair. Trying a new look could be fun.” Only a fourth-grader (no offense, Bebe) would buy that the message is authentic. When Stan’s girlfriend Wendy wants to repair their relationship, Stan responds, “We can work things out if you’re willing. I still believe we can make this work. Let’s not give up on each other.” ChatGPT is credited as a writer in this episode, though I wouldn’t be surprised if the messages were punched up to reach this level of dullness. But the style speaks to something I noticed when I asked ChatGPT to write a short story: It makes everything sound like an unfunny parody. A parody of an attentive boyfriend. A parody of a short story. A parody of a college essay.

AI may supplant me one day, but for now, ChatGPT isn’t an admissions-essay quick fix. It’s not even a moderate threat to the service I offer. And while there are plenty of problems with a system in which the ultra-elite pay someone like me to help package insight into a few hundred words, ChatGPT doesn’t solve any of them. Perhaps one day, we’ll figure out a fairer way forward. For now, I’m quite relieved to report that my expertise is still definitely worth something — maybe even more than $300 an hour.