“Big Tech is trying to interfere in the election AGAIN,” says Donald Trump Jr. “They’re trying to memory hole it,” writes Chaya Raichik, a.k.a. LibsofTikTok. Texas congressman Chip Roy responds, “Can verify.” Roger Marshall, a Republican senator from Kansas, claims he’ll be making an “official inquiry.” Elon Musk asks the question on everyone’s mind: “Election interference?”

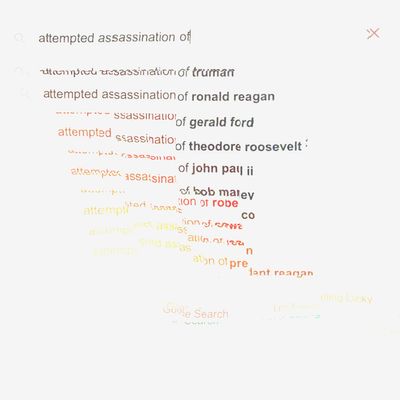

The fix is in, they’re not even hiding it anymore, this one goes all the way to the top, etcetera. We’re talking, of course, about Google Search auto-complete:

This is real, and you can try it yourself: Typing something like “the attempted assassination” will produce a bunch of historical suggestions, and adding “of Donald” will eliminate suggestions altogether. Google, a company whose attempts at avoiding backlash reliably produce backlashes of their own, responded to unsatisfiable critics with an unsatisfying statement: “Our systems have protections against Autocomplete predictions associated with political violence, which were working as intended prior to this horrific event occurring. We’re working on improvements to ensure our systems are more up to date.”

The company is referencing its public auto-complete policies, which claim that it has “systems in place” to prevent the appearance of “health-related predictions,” “sensitive and disparaging terms associated with named individuals,” predictions of “serious malevolent acts,” and “elections-related predictions” (which the company describes as suggestions that might be interpreted as taking “a position for or against any political figure or party” or making “a claim about the participation in or integrity of the electoral process”). Google has published these policies since at least 2018, and the rule about election-related content has been in place since before the 2020 election. What these posts, and the many partisan news stories covering them, don’t mention is that Google blocks a wide range of election- and politics-related suggestions. It’s true that Google won’t offer assassination-attempt-related suggestions for Donald Trump. It also stops suggesting anything if you start to type “Donald Trump felon” or “Donald Trump conviction.” In keeping with the theory that Google is shielding Democrats, it won’t complete “Joe Biden dement …” Undercutting that theory, it won’t even complete “Kamala Harris campa …”

It would be a mistake to take these posts too literally, of course. Don Jr. isn’t trying to make factual arguments about how Google works and isn’t interested in putting together an evidence-based case for tech bias. He’s affirming a preexisting narrative in the context of overlapping ideological and political campaigns. Google is a useful villain.

It would likewise be a mistake to suggest that Google’s policies are coherent, sensible, or unbiased, whatever that would mean. Google is hugely influential, and the fact that auto-complete won’t fill in a massive recent news event is weird. And while it’s insignificant on its own (you can search for all this stuff and get comprehensive results, which is sort of the opposite of a cover-up), it provides a useful map for understanding the strange, shrill, potent, let-me-speak-to-your-manager tech-platform politics of the 2024 election and beyond.

Auto-complete has been a pain in Google’s ass since the company turned it on by default in 2008. In its early years, it was a window into relatively current search trends, suggesting incorrect and occasionally libelous material (the company was sued, with some success, numerous times). By 2013, auto-complete was a real if minor source of political outrage: Barack Obama was “a reptile,” “gay,” “an idiot,” and “a Muslim”; Joe Biden was “a moron,” “dead,” and “a dumbass”; while Ted Cruz was “Canadian,” “a moron,” and “crazy.”

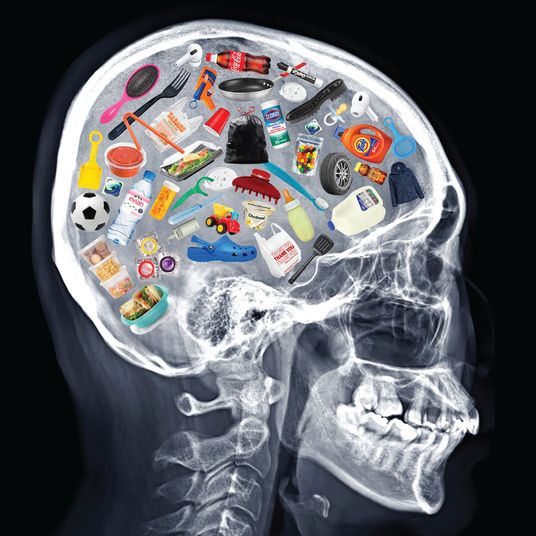

Auto-complete is, again, a minor feature in a major product. It’s nominally a tool for saving time, but from the start, it was also a fascinating portal into what other people were searching for. In hindsight, it was a tool for amplifying dicey material, too: While the suggestions are based on what Google’s customers are doing, Google is the one publishing them. It’s a vector through which, for example, a relatively small number of people searching for photos of a celebrity’s feet turns into Google actively suggesting that its users search for celebrity feet.

It doesn’t suggest this sort of thing anymore — censorship??? — and is generally pretty cautious these days about what it does. The stakes around tech censorship are understood to be much higher now, and the discourse around them has been thoroughly operationalized. By the 2020 election, search suggestions were refusing a wide range of political content; in 2024, after bruising stretches as scapegoats for liberals and conservatives, big tech companies have been attempting to depoliticize their products, which is both sort of impossible and, as seen here, tends to bring attention to their undeniable importance.

In 2024, a Google auto-suggest backlash might feel rote, an auto-completing mini-scandal for the fully bought-in. But we should expect to see more of this (a lot more) in the next few months. Before the 2020 election, it was a common belief among liberals that social media had in some way swung the 2016 election for Trump, which was reputationally disastrous for big tech; going into the 2024 election, it’s conservative dogma that the 2020 election was in some way stolen, or at least interfered with, by big tech companies. And while companies like Google would very much like to neutralize these perceptions, they can’t. They’re more influential than ever, and the previous main subject of both earnest and bad-faith accusations of bias, the news media, is basically niche in comparison to the big platforms, whose decisions are rightly if not always fairly interpreted in editorial and/or conspiratorial terms. For certain people, including at least one of the candidates, the 2024 election is going to involve a lot of pointing at screens and screaming, “Mods???”

This explains why Google sometimes retreats into willful ignorance. But charges of bias have also been given new life by big tech’s rush into AI, which takes the risk inherent in search auto-complete (Google is telling me to search for something I hadn’t thought of before) and turns it into the entire product. It’s generally glib to say that AI is just fancy auto-complete, but in this sense, the comparison is apt: If search suggestions are fertile ground for accusations of bias, what does that mean for chatbots, which just say things in an authoritative voice, under Google’s brand? As Google is already discovering: nothing particularly good.